Passions

(not a post about Spike’s favourite soap opera)

As an INTP, I generally try to make “rational” decisions — which is to say, ones I can rationalise and explain and logically support. That in turn is something I can rationalise, explain and support: I’m fairly good at logic, and I’ve been taught lots of ways of analysing problems that people have discovered over centuries, which helps make better decisions. But the counter-argument to that is that it’s still easy to make mistakes, and mistaken logic can lead you to all sorts of bad ideas; a lot of deep, rational thought went into eugenics, for example. So for me, I like to keep a handle on that at least partly by trying to keep things aligned with my emotional response. If something doesn’t feel right, that’s a good time to look back through the logic, because there’s probably a mistake. If you can’t find a mistake, and that doesn’t make you feel better, it’s a good time to be cautious in other ways; if you do feel better, that probably means you’ve got a better understanding than you did before and definitely means you’ll be able to act on the ideas more effectively; and if you do find a mistake, well, you get a chance to fix it.

Going the other way (rationalising whatever you already feel) isn’t so effective to my mind; it’s often easier to make an apparently logical argument that’s actually wrong than to work out how it’s wrong. That can be useful as a defence mechanism to rebuff challenges to what you want to do, but you can generally rationalise anything without much effort, so it’s not actually adding information or improving your decision. And if you infer from the fact you’ve come up with a rationalisation that your decision is the only rational one to make, you can end up with a mind closed to better alternatives, leaving you with a worse decision than if you’d just continued going with your gut feeling. Personally, I tend to treat that as a fun game: take a completely subjective and illogical response to something (eg, “orange is the best colour”), then “logically” and “conclusively” prove it’s the only justifiable response. At the very least, it’s a good way to keep a little humility about the value of a good argument.

Logically investigating something you had a gut feeling about (“I like orange. Hmm, is there some way to tell what the best colour is?”) is a different matter entirely, of course. As you think about it, the analysis may lead you to find something different to what you expected (“that’s odd, I think I just proved chartreuse is the best colour”), which might lead you to investigate different definitions for your terms (“perhaps orange is best in some ways, and chartreuse is better in others”) if it doesn’t lead you to change your opinions (“oh. my. god. this chartreuse cape is to die for!”).

Of course, if you’re not naturally comfortable with coming up with logical arguments, or trained enough to do them well, you’ve probably got other, more personally appropriate, ways of coming up with decisions anyway, and maybe none of this applies. But hey, that’s not my problem.

The other advantage of keeping your feelings in accord with your thoughts is that it tends to be more motivating: “passionate” tends to be a decent description both of someone pretty emotional and of someone pretty motivated and active, and there’s no point in making good, rational decisions without acting on them. In some respects, the more intense the emotion the better; it’s easy to want to quit doing something difficult that’s not immediately rewarding, no matter how logically you’ve convinced yourself that it’s a good idea, but it’s a lot harder to shake off, say, broiling rage, true love, or abject terror.

The trick, then, is if you’ve found something that inspires that sort of emotion, to make sure it’s working in the same direction as the goals you’ve carefully and logically examined. That can be really easy: if your primary emotion is that you care deeply about helping people, seeing someone who’s had a bad day or week or year get a break and maybe break a smile is a good way to keep yourself working in a charity or a hospital, if that happens to be what you want to do. But it’s often not — maybe you’re overwhelmed by anger at the stupid bureaucratic nonsense that’s getting in the way of your hospital helping people, or maybe you’d like to help out in a soup kitchen but you’re terrified of violence in the area.

But, at least sometimes, those can be harnessed too. “Use your anger” isn’t exactly “use the force”, but it still gets some 40,000 hits on Google offering useful advice. My feeling (which I wish I’d had earlier than today, but oh well) is there are probably similar ways to grab most of those emotions and turn them into allies, rather than just trying to figure out ways of making them go away.

- frustration, anger, hate: Figure out exactly what it is that’s the deserving object of your ire, and find ways to harm it. There are lots of entirely reasonable ways to hurt things: a death blow, divide and conquer, death by a thousand cuts, subversion and betrayal; and most entirely reasonable ways of contributing to the good of society can be rephrased into something that’s more acceptable to anger. Annoyed by ignorance on the Internet? Deal it a death blow by creating a site like Wikipedia or Snopes; create a debating forum so ignorant people are fighting each other instead of you, and maybe learning something as a result; contribute to Wikipedia or Snopes or just help your friends avoid spreading urban myths; find a group of people who seem particularly ignorant, join them, become well-educated in their customs, befriend them, and then help them get access to all the knowledge they’ve been missing.

- worry, fear, terror: Be thorough. If you’re worried anyway, you’re going to naturally be thinking of every single way every single thing can go wrong, so take advantage of that and do something about each of those things you think of. Maybe it seems more rational to ignore your worries, and just charge ahead (and maybe it is), but there’s an equally good chance that will just make you worry more and make you less effective, whereas if you actually nervously go around making sure everything is absolutely perfect, you’re at least spending your time contributing to your goals. And every little thing you do fix up is one less thing to worry about, so it’s possible you might end up naturally less worried anyway. Probably not, of course…

- affection, appreciation, love: Dedicate your work, do it in appreciation, or in honour, and make it something that’s worthy of the object of your admiration, whether that be a person or an idea. It’s always tempting to cut corners or strive for something other than your absolute best, but much less so when what you’re doing is making a devout offering to something or someone you care about.

- pride, arrogance, narcissism: You think you’re the best, so do something that demonstrates it. Repeat.

- greed, envy, lust: Be a free-market capitalist — get more of what you want by doing more of what other people want.

- embarrassment, guilt, shame: Accept, apologise, and then do something worthwhile to atone?

- indifference, apathy, sloth: No idea. (Is this an emotion, or the absence of emotion? If the latter, find an actual emotion? If you can’t, just try to avoid watching Dexter for lifestyle tips?)

Anyway, there’s my thought for the day. YMMV. The following quote may or may not add support to the thesis presented:

Peace is a lie, there is only passion.

Through passion, I gain strength.

Through strength, I gain power.

Through power, I gain victory.

Through victory, my chains are broken.

The Force shall free me.

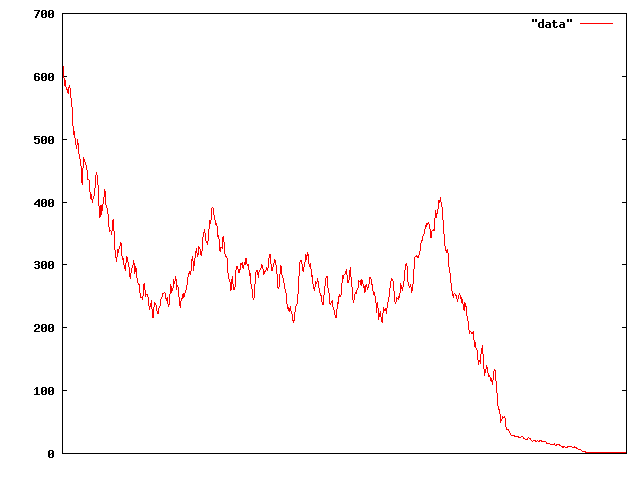

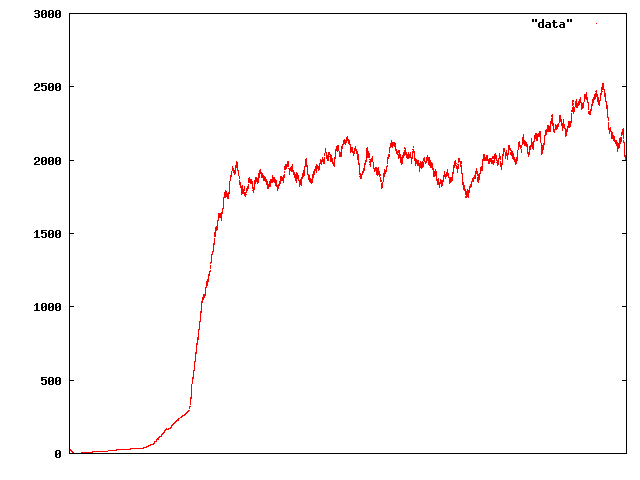

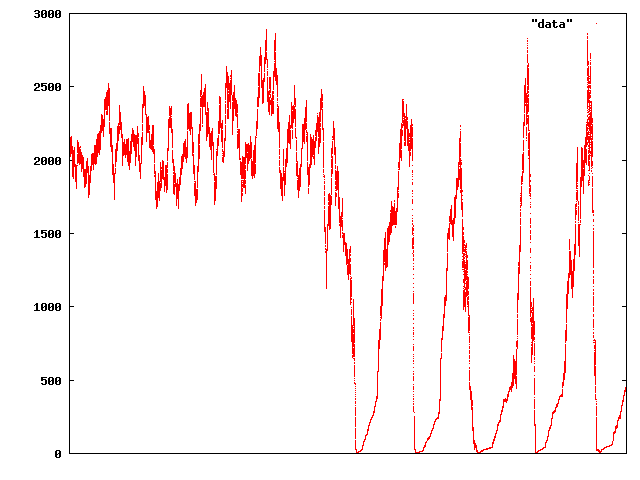

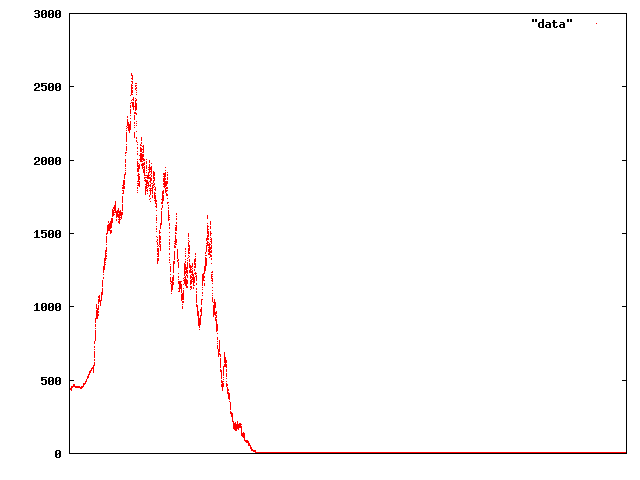

But after the crash, things bounced back okay and stayed pretty stable:

That time series is twenty times as long as the first, so except for a bit of ups and downs, it looks like it might have actually stabilised at some sort of “fundamental” price. But sadly for our virtual traders, it turned out not:

That time period’s about six times as long as the previous, so about 120 times as long as the initial crash snapshot. And it’s not really a market that looks very pleasant to participate in either, and going on just a little further ends up with what appears to be a permanent price crash:

The scale here is back to about the same as the initial recovery.